Pretraining of deep CNN autoencoders

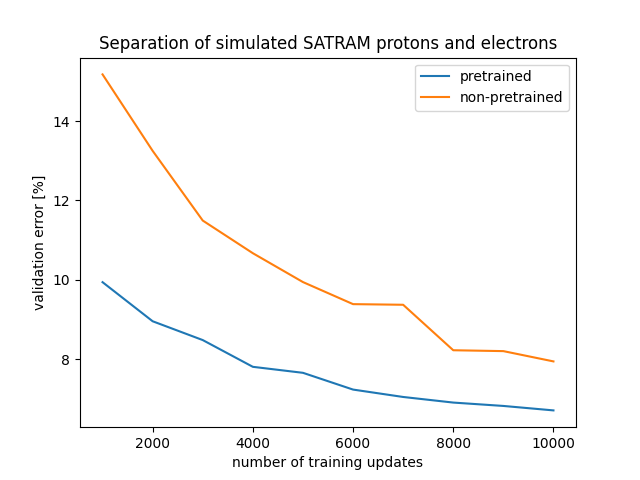

Autoencoders pretrained on unlabeled data from Timepix3 to improve accuracy.

Goal

The goal of the project is to show how pretraining CNN-based autoencoders can be used to learn feature extraction, after which the model can be finetuned for a specific task. This page showcases some of the figures relevant to the project.

Model

We chose EfficientNet-B4 as the encoder architecture, and the decoder uses the transposed architecture of the encoder. Additionally, we attach three heads to the model:

- First head reconstructs the input image

- Second head predicts the binary mask of the whole cluster

- Third head predicts the binary mask of pixels above a fixed threshold
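Because all three heads are driven by the same input, their targets can be derived directly from the cluster image and trained jointly. The following is a minimal NumPy sketch, not the project's training code. It assumes that the whole-cluster mask marks every pixel with nonzero energy, that the losses are mean squared error for the reconstruction head and binary cross-entropy for the two mask heads, and that the heads are weighted equally. For brevity it uses only the energy channel. The names `make_targets`, `bce` and `multi_head_loss`, as well as the threshold value, are hypothetical.

```python
import numpy as np

def make_targets(energy: np.ndarray, threshold: float) -> dict:
    """Build the three training targets from one energy image (hypothetical helper)."""
    return {
        "image": energy,                                            # head 1: the input itself
        "cluster_mask": (energy > 0).astype(np.float32),            # head 2: every hit pixel (assumed)
        "threshold_mask": (energy > threshold).astype(np.float32),  # head 3: pixels above threshold
    }

def bce(prob: np.ndarray, target: np.ndarray, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between predicted probabilities and a 0/1 mask."""
    prob = np.clip(np.asarray(prob, dtype=np.float64), eps, 1.0 - eps)
    return float(-np.mean(target * np.log(prob) + (1.0 - target) * np.log(1.0 - prob)))

def multi_head_loss(pred: dict, targets: dict, weights=(1.0, 1.0, 1.0)) -> float:
    """Weighted sum of the reconstruction MSE and the two mask BCE losses (assumed weighting)."""
    mse = float(np.mean((pred["image"] - targets["image"]) ** 2))
    return (weights[0] * mse
            + weights[1] * bce(pred["cluster_mask"], targets["cluster_mask"])
            + weights[2] * bce(pred["threshold_mask"], targets["threshold_mask"]))

# Toy 2x2 energy image; feeding the targets back as a "prediction" gives a near-zero loss.
energy = np.array([[0.0, 2.0], [10.0, 0.0]], dtype=np.float32)
targets = make_targets(energy, threshold=5.0)
print(multi_head_loss(targets, targets))
```

Under this setup the mask heads only require thresholding the input, so they add supervision at no labeling cost.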

Variations

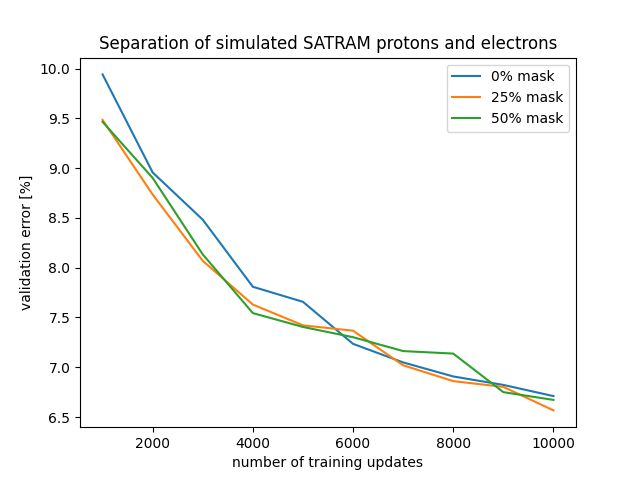

- Different levels of input pixel masking were tried (0%, 25% and 50%)

- Variants with and without the binary mask heads were tested
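The input pixel masking levels can be illustrated with a small NumPy sketch. It assumes that masking means zeroing a random fraction of the cluster's hit pixels in every channel, while the reconstruction target remains the unmasked image. The project's exact masking scheme is not described here and may differ, and the function name `mask_pixels` is hypothetical.

```python
import numpy as np

def mask_pixels(image: np.ndarray, fraction: float, rng: np.random.Generator) -> np.ndarray:
    """Zero out `fraction` of the hit pixels of a (C, H, W) image across all channels."""
    hit = np.argwhere(image[0] > 0)              # (row, col) of pixels with deposited energy
    n_mask = int(round(fraction * len(hit)))
    masked = image.copy()                        # keep the original as the reconstruction target
    if n_mask == 0:
        return masked
    chosen = hit[rng.choice(len(hit), size=n_mask, replace=False)]
    masked[:, chosen[:, 0], chosen[:, 1]] = 0.0  # hide the chosen pixels in every channel
    return masked

rng = np.random.default_rng(0)
image = np.zeros((3, 8, 8), dtype=np.float32)
image[0, 2:6, 3] = 1.0                           # a short vertical track in the energy channel
image[1, 2:6, 3] = 0.5                           # matching time-of-arrival values
for fraction in (0.0, 0.25, 0.5):                # the three masking levels from the text
    masked = mask_pixels(image, fraction, rng)
    print(fraction, int((masked[0] > 0).sum()))
```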

Input

The model input is a 3-channel 384x384 image: an upsampled image of the cluster (up to a 5x size increase in each dimension).

- First channel is dedicated to the deposited energy of each pixel.

- Second channel is for the time of arrival of each pixel.

- Third channel contains a binary map of the pixels above a certain energy threshold.

Data is normalized using square-root normalization.
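The input construction can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the project's preprocessing. A cluster is given as per-pixel coordinates, energy and time of arrival. It is cropped to its bounding box and upsampled with the largest integer nearest-neighbour factor (capped at 5) that fits into 384x384, then centered. The sketch also assumes that "square-root normalization" means taking the square root of each channel after scaling it to [0, 1], and that ToA is measured relative to the earliest hit. The function name `cluster_to_input` and the threshold value are hypothetical. Any Timepix cluster fits, since the 256x256 pixel matrix is smaller than the input.

```python
import numpy as np

SIZE = 384       # model input resolution (from the text)
MAX_SCALE = 5    # upsampling is capped at 5x per dimension (from the text)

def cluster_to_input(x, y, energy, toa, threshold: float) -> np.ndarray:
    """Render one cluster (per-pixel coordinates, energy, ToA) as a (3, SIZE, SIZE) image."""
    x = np.asarray(x, dtype=int)
    y = np.asarray(y, dtype=int)
    energy = np.asarray(energy, dtype=np.float32)
    toa = np.asarray(toa, dtype=np.float32)
    x, y = x - x.min(), y - y.min()                  # crop to the cluster's bounding box
    h, w = int(y.max()) + 1, int(x.max()) + 1

    raw = np.zeros((3, h, w), dtype=np.float32)
    raw[0, y, x] = energy                            # channel 0: deposited energy
    raw[1, y, x] = toa - toa.min()                   # channel 1: ToA relative to the earliest hit
    raw[2, y, x] = energy > threshold                # channel 2: binary above-threshold map

    for c in (0, 1):                                 # assumed square-root normalization to [0, 1]
        peak = raw[c].max()
        if peak > 0:
            raw[c] = np.sqrt(raw[c] / peak)

    scale = min(MAX_SCALE, SIZE // max(h, w))        # largest integer factor that still fits
    if scale < 1:
        raise ValueError("cluster does not fit into the input image")
    up = raw.repeat(scale, axis=1).repeat(scale, axis=2)   # nearest-neighbour upsampling

    out = np.zeros((3, SIZE, SIZE), dtype=np.float32)
    top, left = (SIZE - up.shape[1]) // 2, (SIZE - up.shape[2]) // 2
    out[:, top:top + up.shape[1], left:left + up.shape[2]] = up   # center the cluster
    return out

# A three-pixel horizontal track; only the last pixel exceeds the (hypothetical) threshold.
image = cluster_to_input([10, 11, 12], [20, 20, 20], [1.0, 4.0, 9.0], [100, 101, 102], threshold=5.0)
print(image.shape)
```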

Training

Pretraining

The pretraining has two stages:

- Standard pretraining on the ImageNet dataset: we simply download the pretrained weights for this model from torchvision.

- Unsupervised pretraining of the autoencoder on unlabeled detector data.

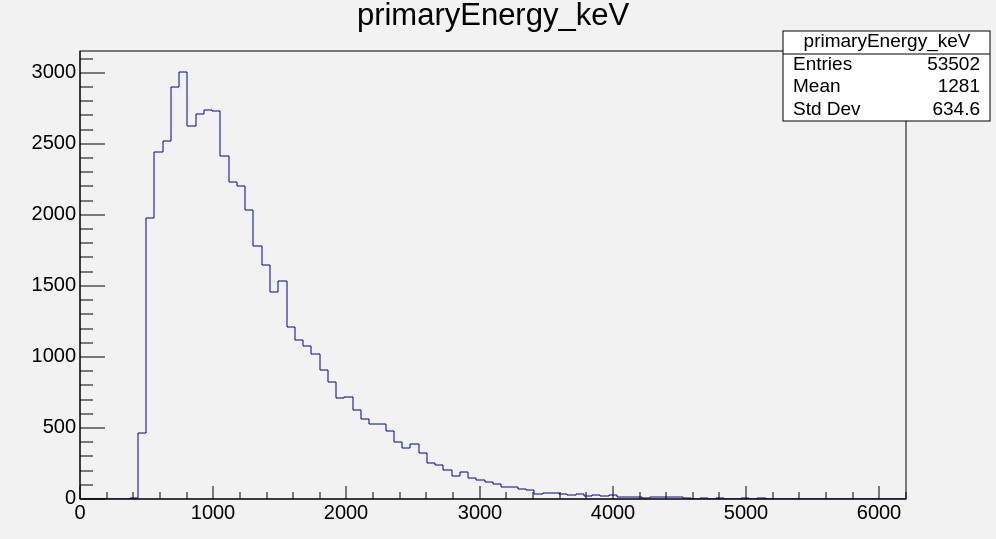

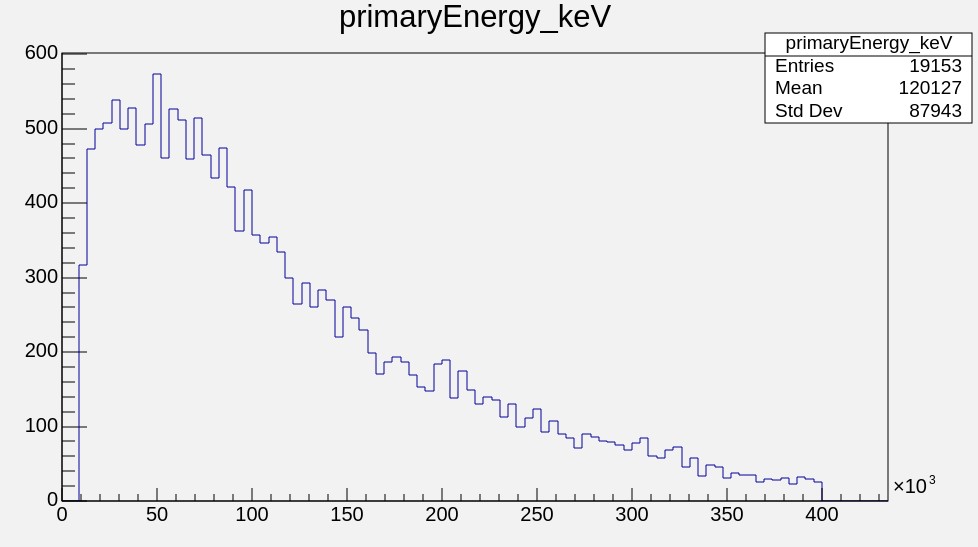

Part of the data for unsupervised pretraining is obtained from Timepix3 measurements in ATLAS. This dataset contains a wide variety of clusters, but small clusters are much more frequent. For this reason, we also used ion data from test-beam measurements of Pb ions at the SPS at CERN, which contain a higher fraction of large clusters.

To balance the dataset, clusters are grouped into exponentially sized bins based on their hit count. We then downsample the clusters so that the frequency across the bins is uniform.
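The balancing step can be sketched with the Python standard library. This sketch assumes powers-of-two bins, so bin k holds clusters with between 2^k and 2^(k+1) - 1 hits, and it downsamples every bin to the size of the smallest one. The bin base and the downsampling target used in the project are not stated, and the function name `balance_by_hit_count` is hypothetical.

```python
import random
from collections import defaultdict

def balance_by_hit_count(clusters, hit_count, seed: int = 0):
    """Group clusters into power-of-two hit-count bins and downsample every bin
    to the size of the smallest one, so all bins are equally frequent."""
    bins = defaultdict(list)
    for cluster in clusters:
        n = hit_count(cluster)                 # number of hit pixels, assumed >= 1
        bins[n.bit_length() - 1].append(cluster)   # floor(log2 n): bin k covers [2**k, 2**(k+1))
    smallest = min(len(members) for members in bins.values())
    rng = random.Random(seed)
    balanced = []
    for k in sorted(bins):
        balanced.extend(rng.sample(bins[k], smallest))   # uniform frequency across bins
    return balanced

# Toy "clusters" represented directly by their hit counts.
data = [1] * 10 + [2, 3] * 5 + [5] * 3 + [100] * 4
print(len(balance_by_hit_count(data, hit_count=lambda n: n)))
```

Using `int.bit_length` keeps the bin index exact for integers, avoiding floating-point rounding in `math.log`. Downsampling to the smallest bin is the simplest reading of "uniform". It discards many small clusters, which is consistent with small clusters being overrepresented in the raw data.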

What happens if we do not use binary mask prediction?

Without it, thin tracks suddenly become noticeably blurrier, as the reconstruction figures show.

Key observations:

- adding binary mask prediction non-trivially increased the sharpness of the reconstructed image

- we were able to reconstruct simple, linear tracks with high precision

- heavy ions were reconstructed as well, but areas with a high density of delta electrons ended up blurred

Supervised finetuning

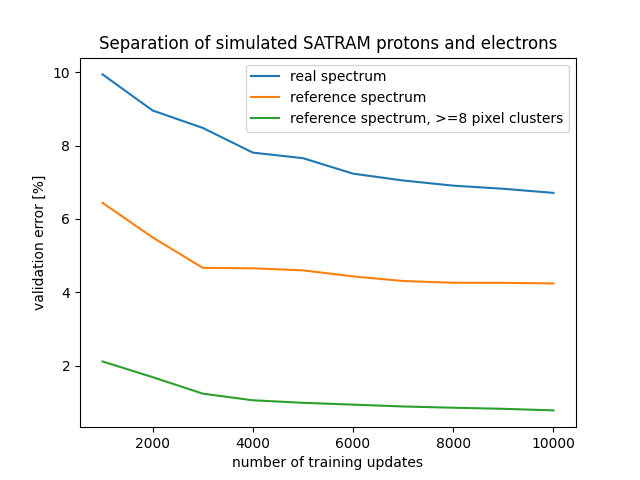

Data for supervised finetuning is obtained from simulations. For instance, we simulate protons and electrons for the SATRAM experiment with Timepix1. Standardized benchmarking datasets for this type of detector are currently lacking. Therefore, besides simulating the real spectrum for the SATRAM instrument, we also created a spectrum identical to the one used in this paper, further referred to as the “reference spectrum” as opposed to the original “real spectrum”.

Validation

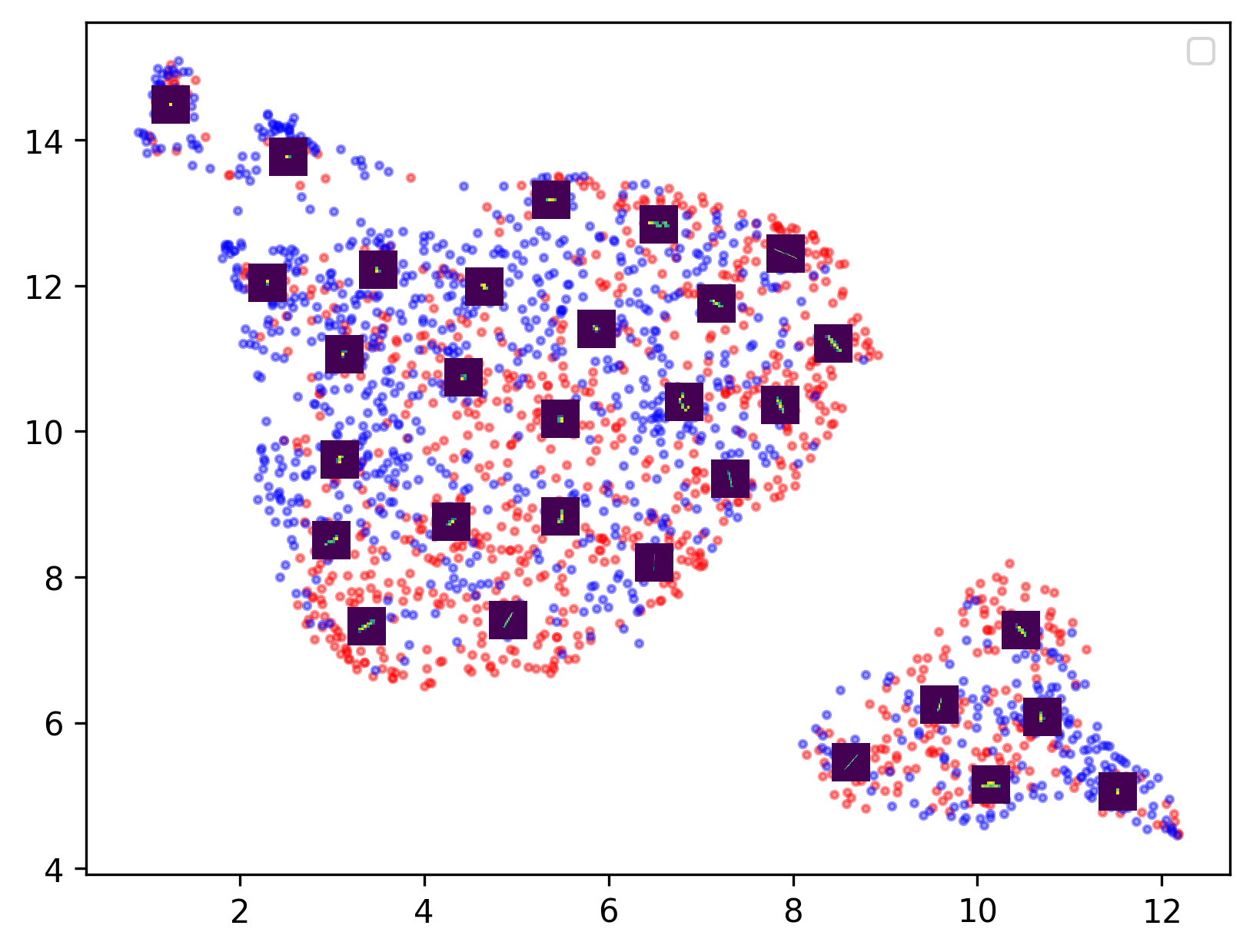

Separated proton and electron feature vectors

Key observations:

- two of the most important features extracted by the model were track length and track angle

- by using the pretrained model, we improved the accuracy of separating protons from electrons on the reference spectrum from the reported 94.79% to 96.2%

- we showed that most errors are caused by a lack of information (tracks smaller than 8 pixels); after filtering those out, we achieved >99% accuracy on a balanced dataset
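The filtered evaluation can be sketched with the standard library. It drops tracks with fewer than 8 pixels, balances the classes, and then computes accuracy. This is an assumed reconstruction of the procedure, not the project's evaluation code. In particular, it assumes that "balanced dataset" means downsampling every class to the size of the smallest one after filtering. The function name `filtered_balanced_accuracy` and the sample format are hypothetical.

```python
import random
from collections import defaultdict

MIN_PIXELS = 8  # tracks smaller than this carry too little information (threshold from the text)

def filtered_balanced_accuracy(samples, seed: int = 0) -> float:
    """samples: iterable of (pixel_count, true_label, predicted_label) tuples.
    Drop small tracks, downsample each class to equal size, return the accuracy."""
    by_class = defaultdict(list)
    for n_pixels, label, pred in samples:
        if n_pixels >= MIN_PIXELS:                    # filter out information-poor tracks
            by_class[label].append(label == pred)     # store whether the prediction was correct
    per_class = min(len(v) for v in by_class.values())
    rng = random.Random(seed)
    correct = [ok for v in by_class.values() for ok in rng.sample(v, per_class)]
    return sum(correct) / len(correct)

# Toy predictions: small tracks are the misclassified ones and get filtered out.
samples = ([(10, "proton", "proton")] * 4 + [(3, "proton", "electron")]
           + [(12, "electron", "electron")] * 3 + [(9, "electron", "proton")]
           + [(2, "electron", "proton")] * 5)
print(filtered_balanced_accuracy(samples))
```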